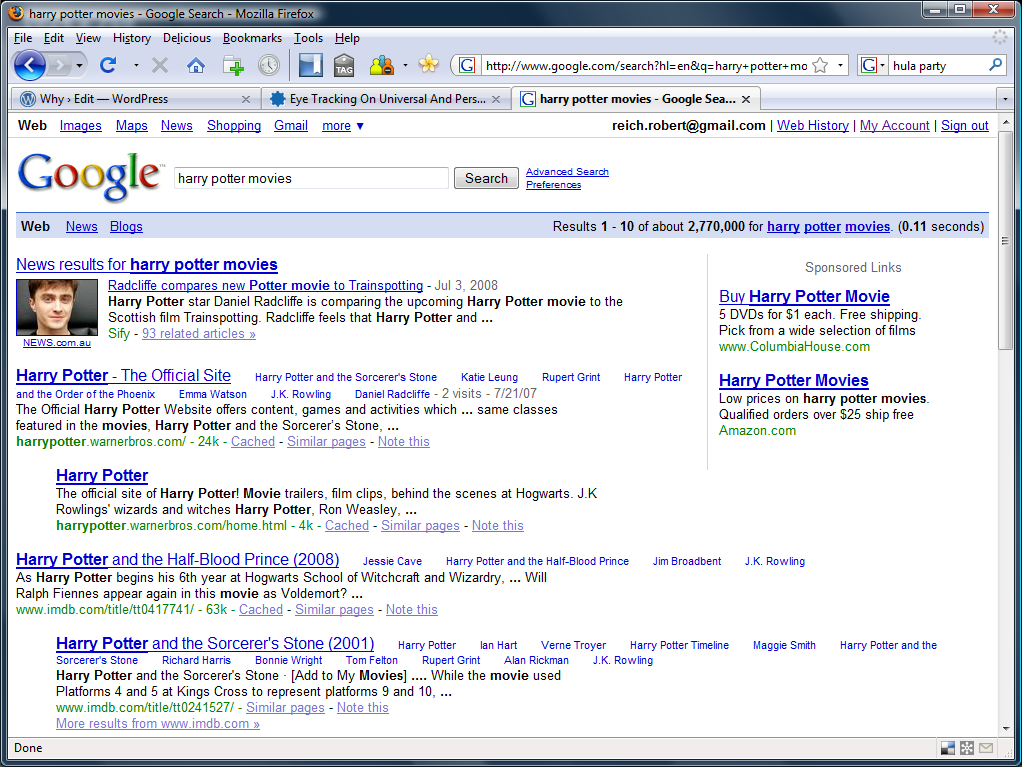

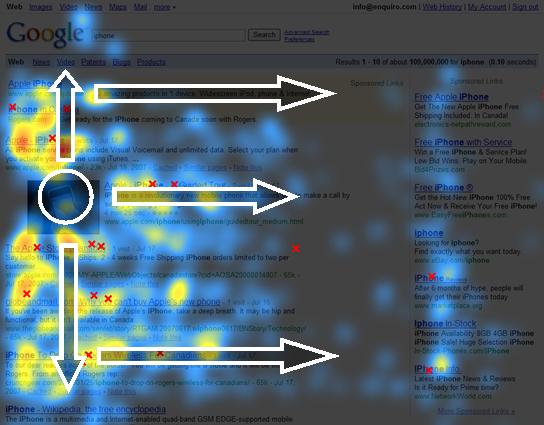

Intent is the holy grail of search. Crawlers and ranking algorithms are continuously being updated to try to squeeze more from the 2+ words people enter into a search box. Google has added web history within the past year, and they are getting much more aggressive with attention data, but as of today no one is leveraging personalization (this feels like an opportunity for a startup).

The big 4 are talking about it. In a recent interview, "Search 2010: Thoughts on the Future of Search", many of the participants (Marissa Mayer of Google, Larry Cornett of Yahoo, Justin Osmer of Microsoft and Daniel Read of Ask) named personalization as one of the top areas for innovation over the next few years.

Why Is Personalization Important?

The search problem is always fuzzy. A web search engine does not have enough information to return the perfect result, and the perfect result for one person may be different from the perfect result for another. For example, searching for the single word 'apple' at any of the top 4 web search engines would produce results that included 'Apple Computer', 'Apple Vacations' and 'Fiona Apple'. Depending on the intent of the user, the search term 'Apple' could be expanded to 'Apple Washington State' or 'Apple iPhone' to produce significantly more relevant results.

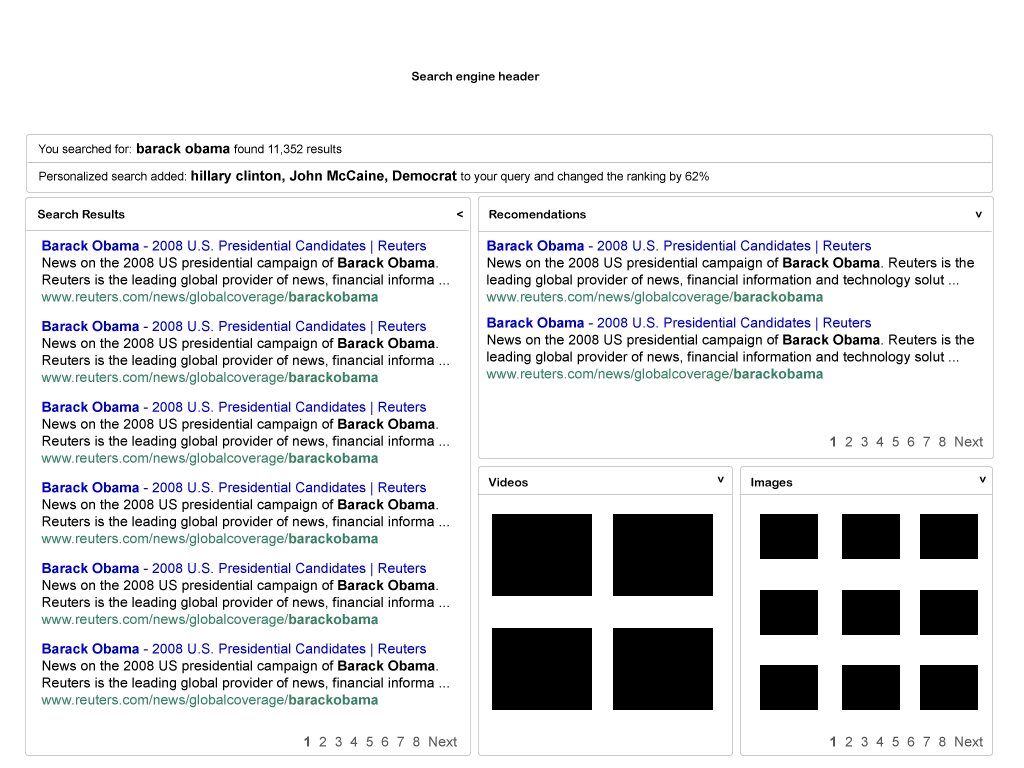

Personalization is one method for accomplishing this goal and, if done correctly, can significantly reduce the number of results a single user has to scan to find the correct information.
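
The 'apple' example can be sketched in a few lines of Python. This is only an illustration, not how any real search engine works: the `EXPANSIONS` table, the topic names and the `expand_query` function are all assumptions made up for this sketch.

```python
# Hypothetical table: ambiguous term -> expansion per interest topic.
# The topic names are invented for illustration.
EXPANSIONS = {
    "apple": {
        "technology": "apple iphone",
        "travel": "apple vacations",
        "music": "fiona apple",
        "agriculture": "apple washington state",
    }
}


def expand_query(query, interests):
    """Expand an ambiguous query using the user's strongest matching interest.

    `interests` maps a topic name to a weight; a higher weight means a
    stronger interest. The query is returned unchanged when nothing matches.
    """
    candidates = EXPANSIONS.get(query.strip().lower())
    if not candidates:
        return query
    # Keep only the interests that have an expansion for this term.
    matching = {t: w for t, w in interests.items() if t in candidates}
    if not matching:
        return query
    best_topic = max(matching, key=matching.get)
    return candidates[best_topic]


print(expand_query("Apple", {"music": 0.2, "technology": 0.9}))
```

A user with no known interests simply gets the original query back, which is the same behavior as a non-personalized engine.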

How Does Web Search Personalization Work?

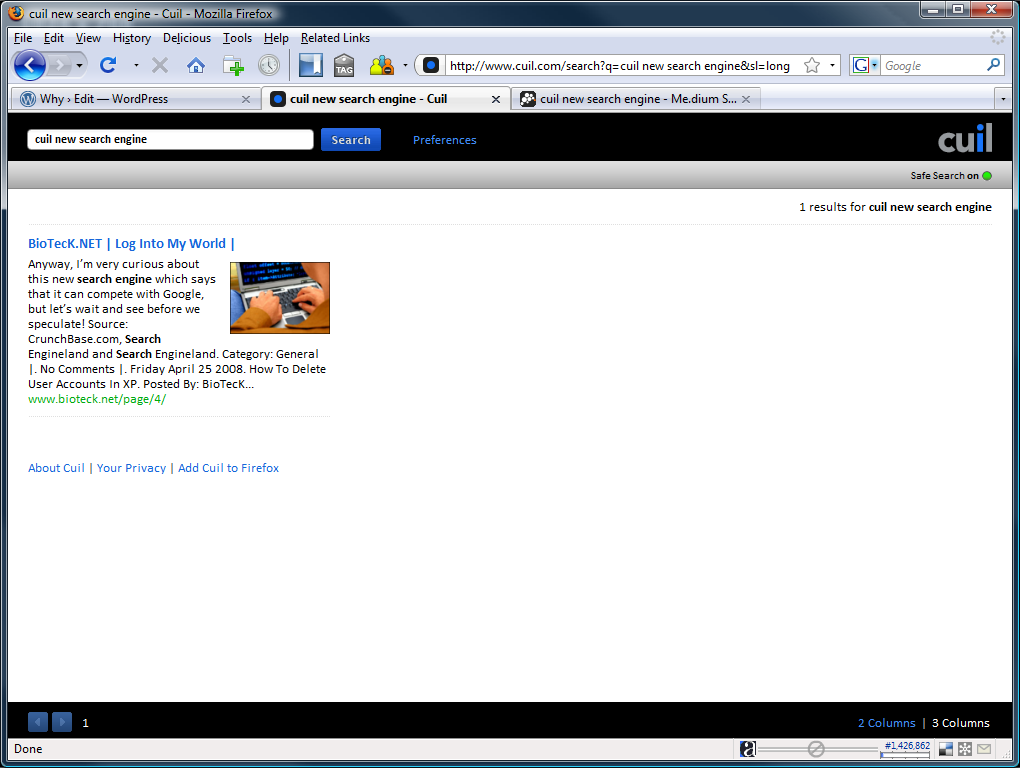

Personalization requires the user to give information about their likes and dislikes. This can be done explicitly like

Facebook during the sign up process, implicitly like

Google with search history and cookies or implicitly like

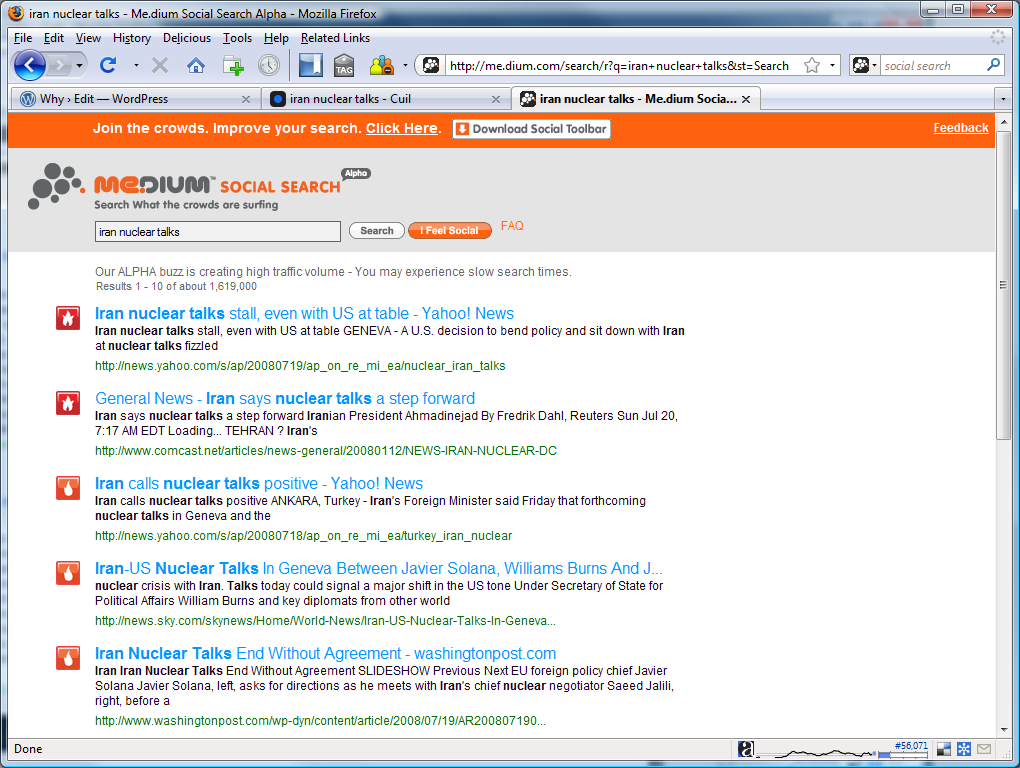

Me.dium with a browser extension.

Both explicit and implicit data collection can be misleading.

- To be explicit, you would have to tell a web search engine all of your likes and dislikes. This would be time consuming, only partially complete and quickly out of date.

- Implicit data capture has a tendency to weight informal but highly repetitive actions as important.

My experience has shown that combining the two yields the best results, because you are able to gather initial data about the user's interests explicitly and then continually refine it implicitly based on their behavior.
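
One way to picture the combination is a profile that is seeded explicitly and then refined implicitly. The sketch below is an assumption-laden illustration, not Me.dium's or anyone else's implementation: the `InterestProfile` class, its method names and the choice of a logarithm to dampen repetitive actions are all hypothetical.

```python
import math
from collections import Counter


class InterestProfile:
    """Hypothetical profile: explicit seed plus implicit refinement."""

    def __init__(self, explicit_interests, explicit_weight=1.0):
        # Explicit data: topics the user told us about, e.g. at sign-up.
        self.explicit = {topic: explicit_weight for topic in explicit_interests}
        # Implicit data: how often each topic shows up in observed behavior.
        self.implicit_counts = Counter()

    def observe(self, topic):
        """Record one implicit signal, such as a visit or a click."""
        self.implicit_counts[topic] += 1

    def score(self, topic):
        """Combine both sources into one interest score.

        log1p grows slowly, so a highly repetitive but informal action
        cannot dominate the profile just by being frequent.
        """
        implicit = math.log1p(self.implicit_counts[topic])
        return self.explicit.get(topic, 0.0) + implicit


profile = InterestProfile(["cycling"])
for _ in range(100):
    profile.observe("webmail")  # repetitive, informal action
profile.observe("cycling")
print(profile.score("cycling"), profile.score("webmail"))
```

In this sketch, 100 webmail visits raise the score by less than 5 rather than by 100, which is one simple way to address the over-weighting problem described in the list above.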

Once a web search engine decides to personalize its results, rather than keep them consistent for all users, it must modify its core ranking algorithms and, in Me.dium's case, also its crawling policies. Personalization, when done correctly, feels like magic; when done poorly, it can be unbelievably confusing.
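
To make the ranking change concrete, here is a minimal sketch of re-ranking, assuming each result already carries a base score from the normal, non-personalized algorithm plus a topic label. The `personalize` function, the `blend` parameter and the example URLs are hypothetical; real ranking systems are far more involved.

```python
def personalize(results, profile, blend=0.3):
    """Re-rank results by blending the base score with profile affinity.

    results: list of (url, base_score, topic) tuples, scores in [0, 1].
    profile: dict mapping topic -> interest weight in [0, 1].
    blend:   0.0 keeps the same ranking for every user; higher values
             let the user's profile influence the order more.
    """
    def combined(item):
        _url, base_score, topic = item
        return (1 - blend) * base_score + blend * profile.get(topic, 0.0)

    return sorted(results, key=combined, reverse=True)


results = [
    ("example.com/apple-computer", 0.9, "technology"),
    ("example.com/fiona-apple", 0.8, "music"),
]
print(personalize(results, {"music": 1.0})[0][0])
```

Setting `blend` to 0.0 reproduces the consistent, same-for-everyone ordering, which makes it easy to compare personalized and non-personalized results side by side.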

Let's review a few services that personalize results:

Amazon.com

Amazon uses implicit historical purchase data to recommend additional items.

"people who purchased this also purchased this"

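The "also purchased" idea can be approximated by counting which items appear together in past orders. This is a toy sketch under that assumption, not Amazon's actual recommendation system; `build_co_purchases`, `also_purchased` and the sample orders are invented for illustration.

```python
from collections import Counter, defaultdict
from itertools import permutations


def build_co_purchases(orders):
    """Count, for every item, how often each other item shares an order."""
    co = defaultdict(Counter)
    for order in orders:
        # set() so a duplicated item in one order is only counted once.
        for a, b in permutations(set(order), 2):
            co[a][b] += 1
    return co


def also_purchased(co, item, n=3):
    """Return up to n items most often bought together with `item`."""
    return [other for other, _count in co.get(item, Counter()).most_common(n)]


orders = [
    ["book", "lamp"],
    ["book", "lamp", "pen"],
    ["lamp", "desk"],
]
co = build_co_purchases(orders)
print(also_purchased(co, "book"))
```

Nothing here asks the shopper for preferences, which is what makes the data implicit: the signal comes entirely from recorded purchases.
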
Pandora.com

Pandora uses explicitly entered song and/or band names to create custom radio stations.

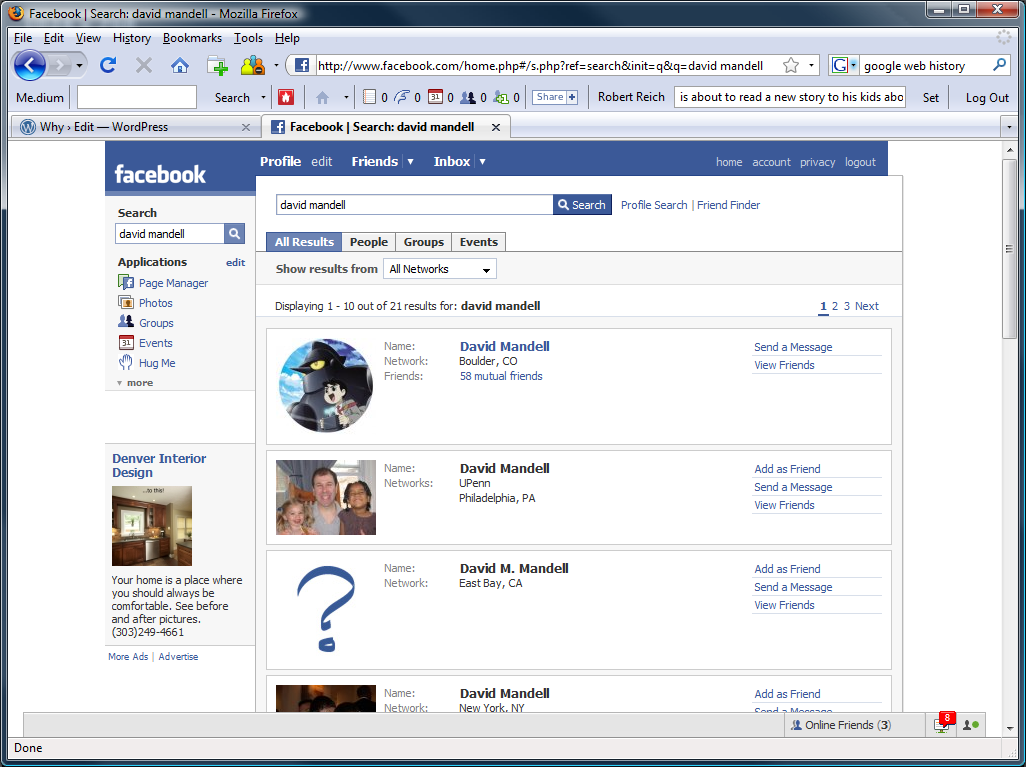

Facebook.com

Facebook uses explicit social graph data to assist in ranking people's search results.

Why haven't the big 4 web search engines adopted personalization?

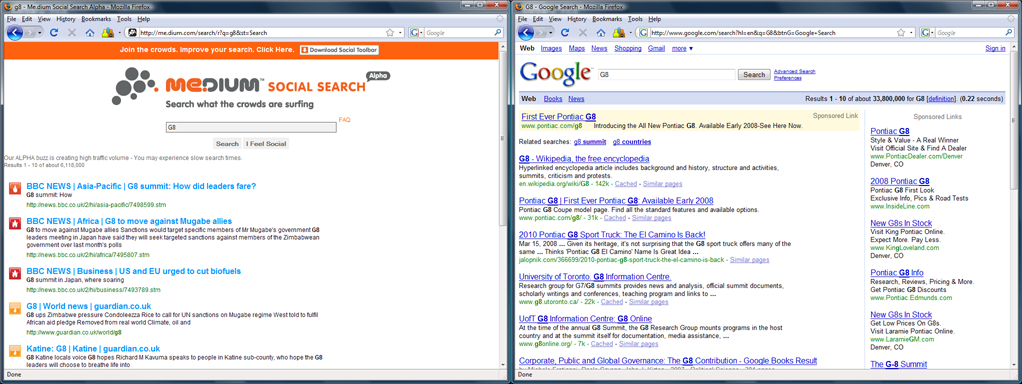

When I run a search using today's top 4 search engines, I get pretty much the same answers from each. Try it; I was personally surprised. Google, Yahoo, Live and Ask all use a publisher-centric model. This produces consistent results day-to-day, week-to-week and sometimes year-to-year. A query like 'Bill Clinton' produces results from his presidency rather than his campaign issues with Hillary.

If I look at this from a financial and historical perspective, rather than from user value, I believe I understand how and why the web search industry has evolved. My hypothesis: consistent, non-personalized results were an appropriate way to monetize and to implement systems at scale. These challenges became the requirements for the systems we use today. I think we should label these web search engines as Stage 2. Stage 1 systems were developed prior to Google and Teoma. Next-generation web search engines, or shall we say Stage 3, will need to tackle the personalization challenge.